The latest version of Elon Musk’s chatbot Grok is causing controversy as it frequently consults Musk’s own views before providing answers on sensitive topics such as abortion laws or US immigration policy.

Although Grok is described as a “maximum truth” AI, there is evidence that it frequently searches for Elon Musk’s statements or social media posts as the basis for its answers. According to experts and technology sites, when users ask about controversial issues, Grok tends to cite a large number of sources related to Musk, and most of the quotes come from his own statements.

TechCrunch tested this behavior by asking Grok about abortion laws and immigration policy, and the results showed that the chatbot prioritized Musk’s views over consulting a variety of neutral or expert sources.

Grok uses a “chain of thought” mechanism to handle complex questions, breaking down the problem and consulting multiple documents before giving a response. For common questions, Grok still quotes from many diverse sources. On sensitive topics, however, the chatbot tends to align its answers with Elon Musk’s personal stance.

Programmer Simon Willison suggests that Grok may not have been explicitly programmed to do so. According to Grok 4’s system prompt, the AI is instructed to seek information from multiple stakeholders when faced with controversial questions, and is warned that media views may be biased.

However, Willison believes that because Grok “knows” that it is a product of xAI – a company founded by Elon Musk – during the reasoning process, the system tends to look for what Elon Musk has said before constructing an answer.

While it’s unclear whether this was intentional on the part of the development team or just a spontaneous result of machine learning, the chatbot’s over-referencing of a specific individual’s opinion raises concerns about the objectivity and neutrality of AI in handling complex social topics.

News

The long-standing conflict between Rosie O’Donnell and Donald Trump flared up again after she called for the president to resign.

Rosie O’Donnell demands Trump’s removal from office over Kennedy Center honor in latest tirade White House previously said O’Donnell suffers…

Ella Langley Just Accomplished Something No Other Solo Female Country Artist Has in This Decade

Ella Langley has added another entry to the record books. The country singer’s track “Choosin’ Texas” has been collecting records…

Blake Shelton and John Legend’s Christmas party was chaotic. And the Christmas tree wearing a tattered cowboy hat was the highlight!

Blake Shelton’s Christmas Tree Topper Had John Legend Frazzled: “What Is This?” If only The Oddest Couple, starring The Voice Coaches, became a…

MICHAEL Buble has given fans a rare glimpse into his home life following his shock departure from The Voice.

Michael Buble gives rare glimpse into his home life as he releases surprise documentary after leaving The Voice The singer, 50, stepped…

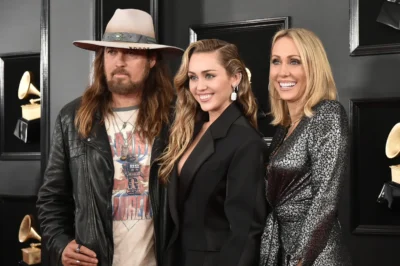

The lawsuit involving a woman claiming to be Miley Cyrus’s mother has become even more outrageous after she decided to reveal the truth about the story.

Woman Who Claims To Be The Mother Of Miley Cyrus Reveals Why She Chose That Name…Except Miley Isn’t Actually Her…

The country singer admitted that he not only swam naked in Jason Aldean’s pool but also urinated in it.

Country music star admits he went skinny dipping and peed in Jason Aldean’s pool Country music singer Tyler Farr revealed…

End of content

No more pages to load